Hmm, consider me intrigued:

Alloy is AI prototyping built for product management:

➤ Capture your product from the browser in one click

➤ Chat to build your feature ideas in minutes

➤ Share a link with teammates and customers

➤ 30+ integrations for PM teams: Linear, Notion, Jira Product Discovery, and more

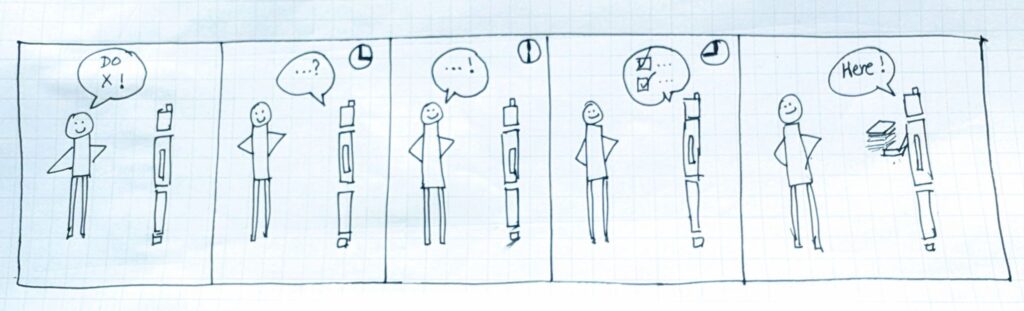

Check out the brief demo:

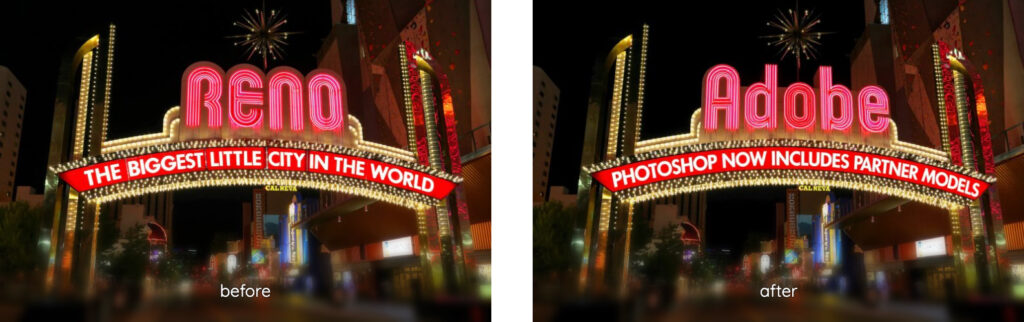

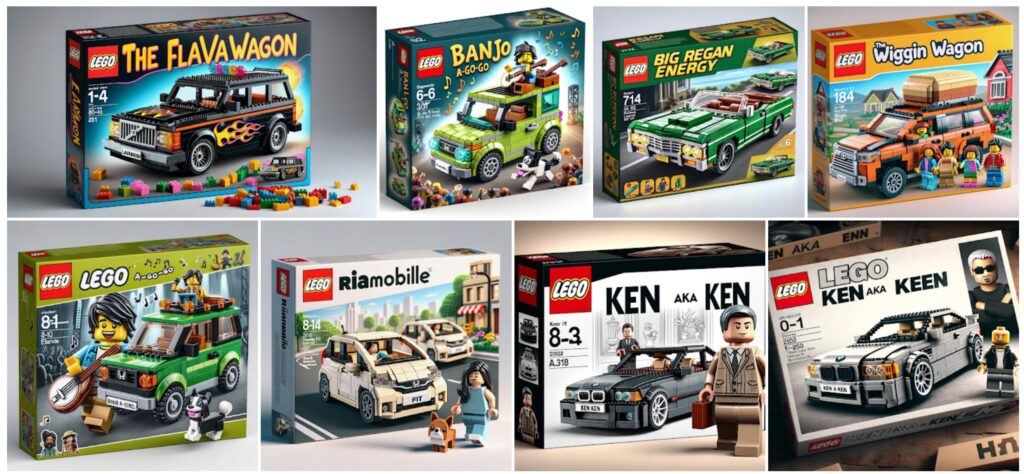

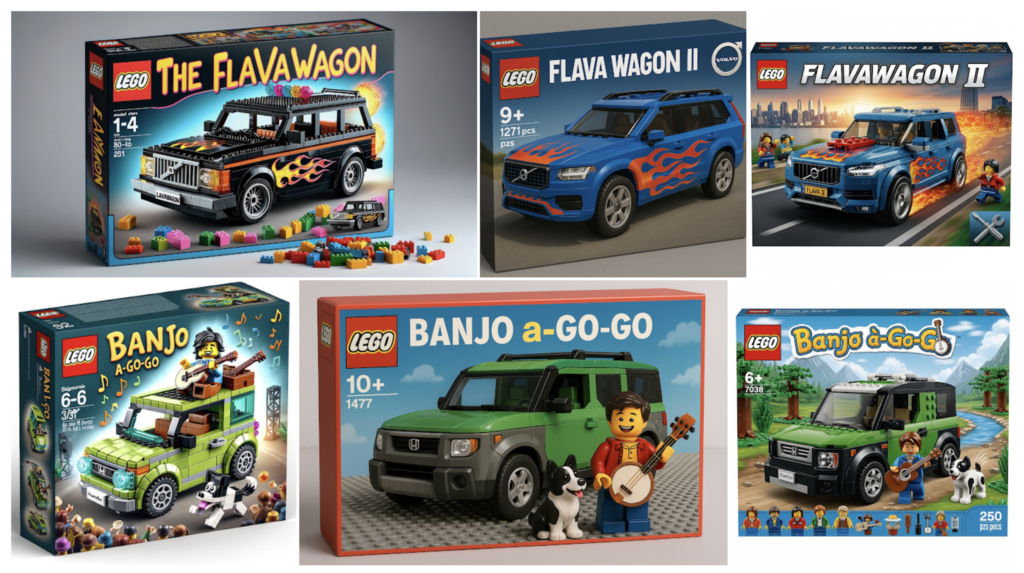

It’s official – I’m excited to introduce Alloy (@alloy_app), the world’s first tool for prototypes that look exactly like your product.

All year, PMs and designers have struggled with off-brand prototypes – built with “app builder” tools that look nothing like their existing… pic.twitter.com/DztKl2HtQg

— Simon Kubica (@simon_kubica) September 23, 2025