What the what?

Noodle writes,

A multiple-camera, 360-degree timelapse planetary panorama of the night sky by photographer Vincent Brady, with original score by musician Brandon McCoy.

[YouTube]

A couple of ex-Microsoft Research guys put together Levitagram, an admirably dirt-simple app for creating “levitation photos.” They say they’ve gotten half a million downloads and offer a couple of interesting details:

[YouTube]

108 different photographers captured bowlers, batsmen and fielders performing the same pre-decided action, with rather cool results:

Ad agency JWT writes,

The final TV spot includes 1,440 images stitched together from a bank of 225,001 crowd-sourced images of cricket-crazy youth. The 1,440 seamless action images, captured by both cricket-crazy youth and the 108 photographers, were chosen and stitched together to complete one action of the journey of one ball from bowler to batsman to fielder to keeper.

[YouTube] [Via Ben Jones]

Jos Stiglingh used a DJI Phantom 2 & a GoPro Hero 3 to capture this unique perspective:

[YouTube]

I’ll bet this thing is super effective at driving girls away & recording their flight:

Lost due to equipment malfunction: The part where the Cobra Kais jump this dude & throw him into a drainage culvert.

[YouTube]

Spredfast tested the last 100,000 images that included the #nofilter hashtag to get a sample from the platform… Turns out that 11% of photos using the #nofilter hashtag on Instagram actually have a filter, a percentage that adds up to roughly 8.6 million photos.

Conversely, a young coworker of mine mentions that she sometimes tags photos with “#vsco” so they’ll draw more views, even when she hasn’t used VSCO tools to edit them.

Some people want to project the authenticity they believe comes with being filter-free, and others want membership in the cool-kid photography club (regardless of tool choice).
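The Spredfast figures hang together: if 11% corresponds to roughly 8.6 million photos, the implied pool of #nofilter photos is about 78 million. The sketch below does that arithmetic and, assuming the 100,000-image sample behaved like a simple random sample (an assumption; "the last 100,000 images" may not be one), puts a rough normal-approximation 95% margin on the 11% figure.

```python
import math

# Figures quoted from the Spredfast study.
SAMPLE_SIZE = 100_000    # images checked
FILTERED_SHARE = 0.11    # share that turned out to have a filter
FILTERED_PHOTOS = 8.6e6  # "roughly 8.6 million photos"


def implied_total(filtered_photos: float, share: float) -> float:
    """Back out the total #nofilter population implied by a count and a share."""
    return filtered_photos / share


def margin_of_error(share: float, n: int, z: float = 1.96) -> float:
    """Normal-approximation margin for a proportion (assumes random sampling)."""
    return z * math.sqrt(share * (1 - share) / n)


if __name__ == "__main__":
    total = implied_total(FILTERED_PHOTOS, FILTERED_SHARE)
    moe = margin_of_error(FILTERED_SHARE, SAMPLE_SIZE)
    print(f"implied #nofilter photos: {total / 1e6:.1f} million")
    print(f"filtered share: {FILTERED_SHARE:.1%} +/- {moe:.2%}")
```

With a sample that large, the sampling error is a fraction of a percentage point; any real uncertainty lies in how representative "the last 100,000" were.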

Another curious bit of single-serving sociology:

In the spirit of the video of strangers kissing for the first time, a filmmaker got 40 of his acquaintances together and had them slap each other in the face.

My favorite part of playing Doc in West Side Story was, night after night, cracking my slightly pretentious friend in the mouth.

[YouTube] [Via Bridgette W]

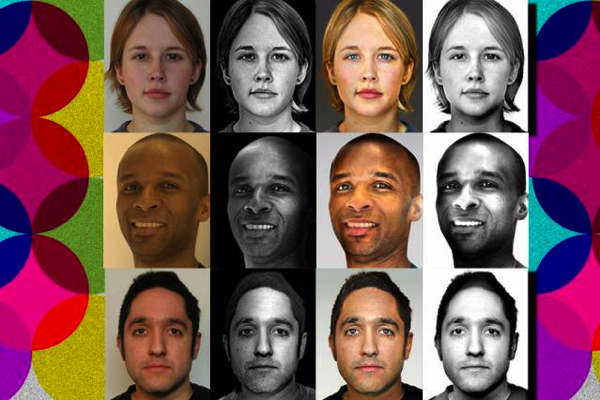

Interesting work from Esther Honig:

Photoshop has become a symbol of our society’s unobtainable standards for beauty. My project, Before & After, examines how these standards vary across cultures on a global level. […]

With a cost ranging from five to thirty dollars, and the hope that each designer will pull from their personal and cultural constructs of beauty to enhance my unaltered image, all I request is that they ‘make me beautiful’.

Below is a selection from the resulting images thus far. They are intriguing and insightful in their own right; each one is a reflection of both the personal and cultural concepts of beauty that pertain to their creator.

Click through to her site to see the full set.

[Via]

I joined Scott Kelby & Matt Kloskowski on The Grid yesterday, starting the product demo around 12:22, getting to the new edit list at 13:55, and talking about how computer vision enables applying interesting, editable looks around 17:00.

What do you think? Where should we go from here?

(Side note/business opportunity for you: I’ll pay good money for a human bark collar that zaps me every time I say “ah.” Good lord… I will fix that, period.)

[YouTube]

Fantastic images from Krisztian Birinyi. Click through to Colossal for more. You can buy prints on 500px.

Google’s been talking about non-destructive, mobile/Web photography for a long time, but until now the benefit has been mostly theoretical: You could apply edits to your images, but you didn’t have an interface for adjusting edits or moving them among images.

Until now.

Check out the newly upgraded G+ editor (i.e., Snapseed for Mac/Windows in all but name): it shows the adjustments applied to your image, letting you adjust or delete each one and copy them from image to image.

To use the new feature:

The other interesting thing is that we’re starting to analyze images & then apply editable sets of adjustments. To start we’re detecting certain landscape & urban shots, then applying an interesting combination of blurs, HDR effects, and frames. I think that the combination of computer vision (being able to identify & classify image content) + application of style + editability is really promising.
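To make the non-destructive idea concrete, here is a minimal sketch of an edit list: the original pixels are never touched, each adjustment is its own entry you can re-tweak or delete, and the whole list can be copied onto another image. The `Adjustment`/`EditStack` names, the one-number "pixels," and the brightness/contrast operations are hypothetical stand-ins, not how the G+ editor is actually built.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical adjustments on a single grayscale value in [0, 1].
OPS: Dict[str, Callable[[float, float], float]] = {
    "brightness": lambda v, amt: v + amt,
    "contrast": lambda v, amt: 0.5 + (v - 0.5) * (1 + amt),
}


@dataclass
class Adjustment:
    op: str        # key into OPS
    amount: float  # slider value; can be changed at any time


@dataclass
class EditStack:
    original: List[float]  # source pixels, never modified
    edits: List[Adjustment] = field(default_factory=list)

    def render(self) -> List[float]:
        """Apply the edits in order to a copy of the original, clamping to [0, 1]."""
        out = list(self.original)
        for edit in self.edits:
            out = [min(1.0, max(0.0, OPS[edit.op](v, edit.amount))) for v in out]
        return out

    def copy_edits_to(self, other: "EditStack") -> None:
        """Give another image an independent copy of this image's adjustments."""
        other.edits = [Adjustment(e.op, e.amount) for e in self.edits]


if __name__ == "__main__":
    photo = EditStack([0.2, 0.5, 0.8])
    photo.edits.append(Adjustment("brightness", 0.1))
    photo.edits.append(Adjustment("contrast", 0.5))
    photo.edits[0].amount = 0.05  # re-edit an earlier adjustment
    del photo.edits[1]            # or remove one entirely
    other = EditStack([0.1, 0.9])
    photo.copy_edits_to(other)    # paste the look onto another image
    print(photo.render(), other.render(), photo.original)
```

An automatically applied look fits the same model: a classifier would simply prefill `edits` with a preset list, which the user can then adjust like any other.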

Enjoy, and please let us know what you think!

…without losing any files or visual quality. 1.5GB of storage is now down to 500MB.

Honestly I’m thinking the misleading “lossy” option should be called “visually lossless,” because as I demonstrated the other day, there’s almost zero chance you’ll ever be able to perceive a difference between this & the lossless compression option. (You’d have to crank up a very dark photo by more than 4 exposure stops.)

Pass it on.

Wow—there is literally no way this could end badly. PetaPixel writes,

Pic Nix is a free online service that allows you to subtly and anonymously call out your friends for committing the most heinous of Instagram crimes. Created by ad agency Allen & Gerritsen, using Pic Nix is simple: just enter the name of the offender, choose from their list of 16 offenses, select one of the pre-written captions and submit your request.

[Vimeo]

…and replace them with lossy DNG proxies? Would I ever see a visual difference?

A) Yes. B) No.

So, a little background:

What I’ve always wondered—but somehow never got around to testing—is whether I’d be able to see any visual differences between original & proxy images. In short, no.

Here’s how I tested:

I repeated the experiment with other images, including some with subtle gradients (e.g. a moonrise at sunset). The results were the same: unless I was being pretty pathological, I couldn’t detect any visual differences at all.

I did find one case where I could see a difference between the lossy & lossless versions: My colleague Ronald Wotzlaw shot a picture of the moon, and if I opened up the exposure by more than 4 stops, I could see a difference (screenshot). For +4 stops or less, I couldn’t see any difference. Here’s the original NEF & the DNG copies (lossless, lossy) if you’d like to try the experiment yourself.

No doubt a lot of photographers will tune out these findings: “Raw is raw, lossless is lossless, the end.” Fine, though I’m bugged by some photogs’ fetishistic, gear-porn qualities (the kind of guys who insist on getting a giant lens & an offsetting full-frame camera) & old-wives’ mentalities (“You can’t reformat your memory card with your computer: this one time, in 2003, my buddy tried it and it made his house burn down…”).

So, to each his or her own. As for me, I’m really, really encouraged by these findings, and I plan to start batch-converting my DNGs to be “lossy” (a great misnomer, it seems).

——

Nerdy footnote: Zalman Stern spent many years building Camera Raw & now works with me on Google Photos. He’s added a bit more detail about how things work:

“Downsampling” is reducing the number of pixels. Reducing the bit-depth is “quantizing.” The quantization is done in a perceptual space, which results in less visible loss than doing quantization in a linear space. Raw data of the sensor is linear where the data going into a JPEG has a perceptual curve applied. (“Gamma” and sRGB tone curves are examples of the general thing around perceptual curves.)
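Zalman's point about quantizing in a perceptual space rather than a linear one is easy to check numerically. The sketch below quantizes deep-shadow values both ways at the same bit depth and reports the worst relative error. A pure 2.2 power curve stands in for the perceptual curve and 8 bits stands in for the reduced depth; both are illustrative assumptions, not the actual lossy DNG encoding.

```python
from typing import Callable

GAMMA = 2.2  # assumed perceptual curve (pure power law; real sRGB has a linear toe)
BITS = 8     # assumed reduced bit depth, for illustration


def quantize_linear(x: float, bits: int = BITS) -> float:
    """Round a linear value in [0, 1] to the nearest of 2**bits levels."""
    levels = (1 << bits) - 1
    return round(x * levels) / levels


def quantize_perceptual(x: float, bits: int = BITS, gamma: float = GAMMA) -> float:
    """Encode with the curve, round, then decode back to linear."""
    levels = (1 << bits) - 1
    encoded = x ** (1 / gamma)            # linear -> perceptual
    q = round(encoded * levels) / levels  # quantize in perceptual space
    return q ** gamma                     # perceptual -> linear


def worst_relative_error(quantizer: Callable[[float], float],
                         lo: float, hi: float, steps: int = 1000) -> float:
    """Largest |q(x) - x| / x over evenly spaced samples in [lo, hi]."""
    worst = 0.0
    for i in range(steps + 1):
        x = lo + (hi - lo) * i / steps
        worst = max(worst, abs(quantizer(x) - x) / x)
    return worst


if __name__ == "__main__":
    # Deep shadows: linear values between 0.1% and 1% of full scale.
    for name, q in [("linear", quantize_linear), ("perceptual", quantize_perceptual)]:
        print(f"{name:>10}: worst relative error {worst_relative_error(q, 0.001, 0.01):.1%}")
```

In linear space the darkest values round all the way to black (100% error), while the perceptual curve spends more of its levels on the shadows and keeps the error far smaller.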

Dynamic range should be preserved and some small amount of quantization error is introduced. (Spatial compression artifacts, as in normal JPEG, are a different form of quantization error. That happens with proxies too.) Quantization error is interesting in that if it is done without patterning, it takes a very large amount of it to be visible.

The places you’d look for errors with lossy raw technology are things like noise in the shadows and patterning via color casts in highlights after a correction. That is, the quantization error gets magnified and somehow ends up happening differently for different colors.
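The "more than 4 stops" threshold from the moon test makes sense once you note that an exposure push is a multiplication in linear space: +N stops scales every value, and therefore every quantization error, by 2^N, so +4 stops makes the error 16 times larger. A sketch, using the same assumed 2.2-gamma, 8-bit quantizer (illustrative values, not the real lossy DNG pipeline) and an arbitrarily chosen dark pixel:

```python
GAMMA = 2.2  # assumed perceptual curve (pure power law), for illustration
BITS = 8     # assumed reduced bit depth, for illustration


def quantize_perceptual(x: float, bits: int = BITS, gamma: float = GAMMA) -> float:
    """Encode with the curve, round to 2**bits levels, decode back to linear."""
    levels = (1 << bits) - 1
    return (round(x ** (1 / gamma) * levels) / levels) ** gamma


def push(x: float, stops: float) -> float:
    """Raise exposure: each stop doubles the linear value (no clipping here)."""
    return x * 2 ** stops


def error_after_push(x: float, stops: float) -> float:
    """Absolute difference between the pushed original and the pushed proxy."""
    return abs(push(quantize_perceptual(x), stops) - push(x, stops))


if __name__ == "__main__":
    dark = 0.002  # a deep-shadow linear value, chosen arbitrarily
    base = error_after_push(dark, 0)
    for stops in range(6):
        err = error_after_push(dark, stops)
        print(f"+{stops} stops: error {err:.6f} ({err / base:.0f}x the unpushed error)")
```

The error that was invisible at normal exposure is the same error, just scaled up; whether it becomes visible depends on how far you push and on how dark the original region was.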

In an interview on Vimeo, the filmmaker looks behind the scenes of making a crazy multi-phone archway to capture NYC street life:

[Vimeo]

From 14-year-old dropout to mountain adventurer to NatGeo cover photographer, Cory Richards gives a lightning tour of his life while meditating on how photography connects us. PopPhoto notes,

There are not many people who would survive a deadly avalanche, and then get up and keep climbing. There are fewer still who would keep taking photos. But that’s exactly what Richards did, and it’s part of what makes his photography so impressive.

You can read more in an interview with Cory on NatGeo’s site.

[Vimeo]

Treat yourself to five minutes of gorgeous aerial photography from Thailand courtesy of Philip Bloom together with After Effects (lens correction), Colorista (color correction), a Phantom 2 drone (featuring prop guards while buzzing young children), and a GoPro. (More info is in Philip’s blog post.)

[Vimeo]

Looks like a neat way to differentiate in- vs. out-of-focus regions.

[YouTube] [Via Aravind Krishnaswamy]

Good, solid tips from Terry White:

- Your Lightroom Catalogs can be stored anywhere. This means that, so long as you know you’ll have Internet, they could technically be stored on Dropbox, Google Drive, Creative Cloud, or the upcoming iCloud Drive to make for easy syncing across computers.

- “Automatically write changes to XMP” preference makes sure that edits in Lightroom carry over to other Adobe programs such as Photoshop and Bridge.

- The “Optimize Catalog” command removes unused caches to speed up catalog performance.

- Advice on how to make the most of Adobe’s impressive Smart Previews feature.

- Move media across your drive directory using only Lightroom, not something such as Windows Explorer or Finder, both of which can leave data behind.

[YouTube]

Reminds me of one or two blowhard-y pro photographers I’ve met. 😉

[YouTube] [Via Franck Payen]

I’m delighted to say that we’ve rewritten the Snapseed editing pipeline from the ground up, making it non-destructive & setting the stage for a really exciting future. Just yesterday it arrived on iOS inside the new Google+ app (which, by the way, offers to back up all your photos & videos for free). Engineer Todd Bogdan writes,

Easily perfect your photos with a powerful new editing suite in the Google+ app for iPhone and iPad. With these Snapseed-inspired tools you can crop, rotate, and add filters and 1-tap enhancements like Drama, Retrolux, HDR Scape, and more. Add a personal touch to your photos, then easily share them with friends and family. As an added bonus: you can start editing on one device, continue on another, and revert to your originals at any time!

The overall workflow is a work in progress (e.g. right now you don’t get an interface for re-editing your adjustments), but stay tuned: we’re starting to cook with gas.

For years at Adobe I’d joke, “If we’d come up with the idea for Instagram, we still wouldn’t have shipped it, because we’d still be debating, ‘Hmm, do you think we need 16 sliders per filter… or is it more like 32?’ The idea of no sliders at all (which makes people feel more confident, because you can’t feel too responsible for getting things really ‘right’) would never even have come up.”

Well, there goes that joke: Instagram 6.0 (coming out today) adds the ability to fade filters plus make 9 or so adjustments.

If the new effects feel a bit buried in the editing flow, that’s the point. Systrom tells me “I believe that flexibility and simplicity are often at odds.” So instead of cramming the features into the main composition flow, they’re hidden behind the wrench so hardcore users can dig them out, but they don’t complicate things for casual users.

It’ll be interesting to see how people respond to these.
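A "fade" control is commonly implemented as a blend between the unfiltered and filtered pixels. The sketch below assumes a simple per-channel linear mix; that's a typical way to build such a slider, not necessarily what Instagram does, and `warm_look` is a hypothetical stand-in for any filter.

```python
from typing import Callable, Tuple

RGB = Tuple[float, float, float]


def warm_look(px: RGB) -> RGB:
    """Hypothetical stand-in for a filter: boost red, cut blue."""
    r, g, b = px
    return (min(1.0, r * 1.2), g, b * 0.8)


def fade(px: RGB, look: Callable[[RGB], RGB], strength: float) -> RGB:
    """Linear mix of original and filtered color: 0 = original, 1 = full filter."""
    strength = min(1.0, max(0.0, strength))
    filtered = look(px)
    r, g, b = (o + strength * (f - o) for o, f in zip(px, filtered))
    return (r, g, b)


if __name__ == "__main__":
    pixel = (0.5, 0.5, 0.5)
    for s in (0.0, 0.5, 1.0):
        print(s, fade(pixel, warm_look, s))
```

Because the mix is computed from the original each time, the slider stays fully reversible, which fits the "hidden behind the wrench" approach: the default remains one tap, and the fade is an optional refinement.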

A good, quick tour of some fundamentals—plus the often-forgotten (by me) On-Image Adjustment Tool:

[YouTube]

My old Adobe colleague Sylvain Paris & a team at MIT have long worked on enabling style transfer among images (see demos from 2010 & last year), and now they’re demonstrating further progress in a new SIGGRAPH paper:

Using off-the-shelf face recognition software, they first identify a portrait, in the desired style, that has characteristics similar to those of the photo to be modified. “We then find a dense correspondence — like eyes to eyes, beard to beard, skin to skin — and do this local transfer,” Shih explains.

Check out this quick demo, including the ability to apply style to video (provided it’s a headshot):

Neat stuff. [YouTube]
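The "local transfer" step is easiest to see in one dimension. Methods in this family typically split the image into frequency bands and, within each band, rescale the input's local energy to match the example's. The sketch below does only that per-band gain step, assuming the two signals are already aligned by the dense correspondence. The box window, `radius`, and `eps` are simplifications for illustration, not the paper's parameters or its full algorithm.

```python
import math
from typing import List


def local_energy(band: List[float], radius: int) -> List[float]:
    """Mean of squared values in a box window around each sample."""
    n = len(band)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(v * v for v in band[lo:hi]) / (hi - lo))
    return out


def transfer_band(inp: List[float], example: List[float],
                  radius: int = 2, eps: float = 1e-8) -> List[float]:
    """Scale each input sample so its local energy matches the aligned example's."""
    e_in = local_energy(inp, radius)
    e_ex = local_energy(example, radius)
    return [v * math.sqrt((b + eps) / (a + eps)) for v, a, b in zip(inp, e_in, e_ex)]


if __name__ == "__main__":
    detail = [0.1, -0.2, 0.3, -0.1, 0.05, 0.2]      # input band (e.g. skin texture)
    punchier = [3 * v for v in detail]              # aligned example with stronger detail
    print(transfer_band(detail, punchier))
```

The input keeps its own detail pattern; only its local strength is borrowed from the example, which is why a good correspondence (eyes to eyes, skin to skin) matters so much.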

Paul Trillo worked with Nokia to rig up 50 Lumia 1020 cameras & capture dizzying slices of New York life, complete with snatches of audio.

https://www.youtube.com/watch?v=LmDNjZM3Tu8

Here’s a peek behind the scenes:

https://www.youtube.com/watch?v=hHVkNcvxMZM

Elsewhere, Jonas Ginter made a 360° tiny planet using an array of six GoPro cameras:

Watching this I couldn’t help wishing that SNL’s Stefon was there to chronicle New York’s hottest new club, Whoooosh!, currently descending onto a barge in the East River…
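Tiny-planet images are commonly made by stereographically projecting a stitched equirectangular (360° × 180°) panorama. The sketch below shows the per-pixel lookup, assuming the panorama is already stitched; it's the generic mapping, not necessarily the pipeline Ginter used, and `scale` is an illustrative knob for how large the planet appears.

```python
import math
from typing import Tuple


def tiny_planet_lookup(x: float, y: float, scale: float,
                       pano_w: int, pano_h: int) -> Tuple[float, float]:
    """Map an output offset (x, y) from the planet's center to a source pixel
    (u, v) in an equirectangular panorama, via stereographic projection.

    Straight down (the nadir) lands at the center, the horizon lands on the
    circle of radius 2 * scale, and the sky fills everything outside it.
    """
    r = math.hypot(x, y)
    angle_from_nadir = 2 * math.atan(r / (2 * scale))  # 0 at center, toward pi far out
    latitude = angle_from_nadir - math.pi / 2          # -pi/2 at nadir, 0 at horizon
    longitude = math.atan2(y, x)                       # -pi .. pi around the circle
    u = (longitude + math.pi) / (2 * math.pi) * pano_w
    v = (math.pi / 2 - latitude) / math.pi * pano_h    # row 0 = zenith, row pano_h = nadir
    return u, v


if __name__ == "__main__":
    for offset in [(0.0, 0.0), (100.0, 0.0), (200.0, 0.0), (600.0, 0.0)]:
        print(offset, tiny_planet_lookup(*offset, scale=100.0, pano_w=4000, pano_h=2000))
```

To render, loop over every output pixel, call the lookup, and sample the panorama at `(u, v)` (nearest-neighbor or bilinear); for a time-lapse, repeat per frame.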

Can you turn a square Instagram pic into an interesting landscape? Bryan O’Neil Hughes shows you how:

[YouTube]

“It’s not ‘bullshit,’ but rather ‘male bovine fecal matter extruded on a longitudinal axis.’”

Enjoy. 🙂

[Via]

If you’re like me (and most people), you take a trip, take a bunch of photos & videos, never really go through them, think “Oh, I really should make/share a gallery or something,” and then fail to do so—maybe feeling vaguely guilty about it.

Google+ Stories changes that.

My boss Anil writes,

No more sifting through photos for your best shots, racking your brain for the sights you saw, or letting your videos collect virtual dust. We’ll just gift you a story after you get home. This way you can relive your favorite moments, share them with others, and remember why you traveled in the first place.

Here’s a sample story made from Anil’s family photos. My colleague Ben says,

“We’ve added not just the photos and videos but the travel information, places and restaurants you went to along the way,” says Google+ product manager Ben Eidelson. “We’ve given this all to you automatically when you’ve gotten back from whatever you’re doing so you don’t have to stress about that on top of doing your laundry and unpacking.”

Here’s a nice summary from Ben & USA Today’s Jefferson Graham:

“Is Nik dead?”

Nope, not even a little. 🙂

Google’s photography evangelist, Brian Matiash, joined Scott Kelby & Matt Kloskowski to present the new Analog Efex Pro on last week’s episode of The Grid. Check out the new stuff in action as Brian answers questions from the audience. (You can jump ahead to around 17:20 in case the embed below doesn’t do that automatically.)

[YouTube]

Gannon Burgett writes in PetaPixel,

After spending some time with the program, it seems as though Analog Efex Pro II is a great deal speedier than its predecessor. Not only is speed improved though, it offers a much more diverse array of filter options and far more precision in terms of nailing the toning of an image, adding grain, etc.

Overall, it’s a rather impressive improvement and while I was admittedly skeptical at first, it’s most certainly worthy of calling itself 2.0.

My new team is constantly working to polish the Google+ Photos experience. Recently we’ve introduced three small enhancements that make it easier to find, manage, and download your files:

As I say, the team is constantly cranking away, so let us know what else you’d like to see!

I’m delighted to announce that Google has just released Analog Efex Pro 2.0 for Mac & Windows, a big free update to the Nik Collection. I think you’re going to love the way you can sculpt blurs, make cool diptychs & triptychs, create really interesting double exposures, and more.

- New control points – Delivering one of the most requested features by our users, control points let you fine-tune the presence of Photo Plates, Light Leaks, the Dirt & Scratches filter, and Basic Adjustments using our U Point® Technology.

- New Cameras and Presets – Expanding on the Cameras from the previous version, you now have access to a larger assortment of new Cameras and presets that take advantage of the powerful filters of Analog Efex Pro 2, such as Black and White, Subtle Bokeh, and Simple Color.

- New creative ways to present your images – We’ve also built three new filters into the Camera Kit, that let you showcase your photos in truly creative ways with Motion Blur, Multilens, and Double Exposure.

You can download the update immediately via the Try Now button. (It’ll recognize your license if you’ve already bought the collection.)

Meanwhile, check out my colleague Brian Matiash putting AEP through its paces in this series:

Enjoy, and please let us know what you think!

[YouTube]

We’ll be showing something cool on Kelby TV’s The Grid (broadcasting live at 4pm Eastern/1pm Pacific). Stay tuned!

Huge photography, hugely important subject:

Photographer Edward Burtynsky and director Jennifer Baichwal give us an inside look into the making of their cinematic feat, Watermark. The documentary was shot using groundbreaking 5K ultra high definition photography and aerial technology and explores mankind’s complicated relationship with water, using a diverse set of stories that challenge how easily we take it for granted.

[Via]

I really enjoyed hearing the enigmatic street photographer’s old neighbors remember her—and hearing how the remembrance brought them together.

Though it veers a bit close to fetishizing materials, I found this short film introducing a new notebook line compelling:

Thanks to the natural texture of the wood, no two “Shelterwood” memo books are the same but all share their origin in the same few hand-picked cherry trees from Northern Illinois and Southern Wisconsin. The wood covers are sustainably produced, with just a few 60″ logs converted into 5000 feet of “Sheer Veneer,” with very little waste (the waste is recycled into wood pellets to heat the factory!). The process can be seen in the film above.

[Vimeo]

New iPhone app Vhoto scans your videos to find (hopefully) amazing still photographs: “Use the Vhoto camera to record video, or import your existing videos. Vhoto’s Chooser looks at hundreds of still photos hidden inside your videos and finds you the best pics.”

https://www.youtube.com/watch?v=lsELdVAlaU4

GeekWire has a few more details.

Thoughts? I’m reminded of this paper from Adobe’s Aseem Agarwala & UW researchers on selecting candid portraits from video.

[YouTube]
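Vhoto doesn't say how its Chooser scores frames, so here is a deliberately simple, hypothetical take on the idea: score each decoded grayscale frame by sharpness (the variance of a Laplacian filter, a common focus measure) and keep the best few, spaced apart so you don't get several near-identical shots. A real app would presumably also weigh faces, expressions, and composition; `choose_frames` and `min_gap` are illustrative names.

```python
from typing import List

Frame = List[List[float]]  # grayscale rows, values in [0, 1]; at least 3x3


def sharpness(frame: Frame) -> float:
    """Variance of the 4-neighbor Laplacian over interior pixels (higher = sharper)."""
    responses = []
    for y in range(1, len(frame) - 1):
        for x in range(1, len(frame[0]) - 1):
            lap = (frame[y - 1][x] + frame[y + 1][x] + frame[y][x - 1]
                   + frame[y][x + 1] - 4 * frame[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def choose_frames(frames: List[Frame], k: int, min_gap: int) -> List[int]:
    """Pick up to k frame indices by sharpness, at least min_gap frames apart."""
    ranked = sorted(range(len(frames)), key=lambda i: sharpness(frames[i]), reverse=True)
    chosen: List[int] = []
    for i in ranked:
        if len(chosen) >= k:
            break
        if all(abs(i - j) >= min_gap for j in chosen):
            chosen.append(i)
    return sorted(chosen)


if __name__ == "__main__":
    blurry = [[0.5] * 6 for _ in range(6)]
    crisp = [[float((x + y) % 2) for x in range(6)] for y in range(6)]
    video = [blurry, crisp, blurry, blurry, crisp]
    print(choose_frames(video, k=2, min_gap=2))
```

The spacing rule is the design choice worth noting: sharp frames tend to cluster (the camera was briefly steady), so picking purely by score would return near-duplicates.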

You can now view a given point in space at multiple points in time—useful for things like the Washington Post showing the rapid development of parts of DC.

If you’ve ever dreamt of being a time traveler like Doc Brown, now’s your chance. Starting today, you can travel to the past to see how a place has changed over the years by exploring Street View imagery in Google Maps for desktop. We’ve gathered historical imagery from past Street View collections dating back to 2007 to create this digital time capsule of the world.

I… I don’t even.

Wow.

Because, gravity.

Seems like a great way to end up on Tosh.0—or Faces of Death.

[Vimeo] [Via Dave Werner]

Russell Brown, let’s burn this candle:

[Vimeo]

Google + Adobe = photographic coolness; seems rather up my alley, eh? Via PetaPixel:

The tutorial will show you how to take a RAW file, prep it in Lightroom, convert it to black and white in Silver Efex Pro 2, and then finish it up back in Lightroom. And although it’s meant specifically for images shot with the Fuji X-T1, it’ll give anybody who wants that grainy, filmic black and white look — which is particularly well suited for street photography — a great place to start.

If this is up your alley, check out more tutorials on the Google Help Center.

[Vimeo]

If you care about the future of photography—how it’s captured, processed, experienced—check out David Pierce’s thoughtful piece on Lytro’s new Illum camera.

What Lytro plans to do is not just change how we take pictures, but to make the very pictures themselves mirror the way we see them in the first place. We interact with the world in three dimensions, touching and moving and constantly changing our perspectives, and light-field photos fit perfectly.

Photographer Kyle Thompson (who at 22 is kind of a badass) notes, “You don’t have to focus exactly on one certain point in the photo, but you also have to remember to try and keep all points of the photo interesting.”

This is the future… [L]ight-field photography — the notion that the future is about turning the complex physical parts of a camera into software and algorithms… — seems almost obvious. Why capture one photo, from one angle, with one perspective, when we could capture everything? When I can explore a photo, zooming and panning and focusing and shifting, why would I ever want to just look at it?
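The "focus after the fact" idea has a classic, simple form: a light-field camera effectively records many slightly offset sub-aperture views, and refocusing shifts each view in proportion to its offset before averaging them. The 1D toy below uses integer shifts and wrap-around edges (simplifications for the example) to show that choosing the shift that matches an object's disparity brings it back into focus. It's the textbook shift-and-add technique, not Lytro's actual pipeline.

```python
from typing import Dict, List


def shift(signal: List[float], s: int) -> List[float]:
    """Circularly shift a 1D signal: out[x] = signal[x - s]."""
    n = len(signal)
    return [signal[(x - s) % n] for x in range(n)]


def capture(scene: List[float], disparity: int, offsets: List[int]) -> Dict[int, List[float]]:
    """Toy light field: the view at aperture offset u sees the scene shifted by disparity * u."""
    return {u: shift(scene, disparity * u) for u in offsets}


def refocus(views: Dict[int, List[float]], alpha: int) -> List[float]:
    """Shift-and-add: undo alpha * u of shift in each view, then average."""
    n = len(next(iter(views.values())))
    total = [0.0] * n
    for u, view in views.items():
        for x, v in enumerate(shift(view, -alpha * u)):
            total[x] += v
    return [t / len(views) for t in total]


if __name__ == "__main__":
    scene = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]  # a single bright point
    views = capture(scene, disparity=1, offsets=[-2, -1, 0, 1, 2])
    print("focused:", refocus(views, alpha=1))
    print("defocused:", refocus(views, alpha=0))
```

With `alpha` equal to the point's disparity every view lines up and the point is sharp; any other `alpha` spreads it out, which is exactly the blur you'd get focusing at a different depth.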

What do you think? Gimmick or game changer?

Lytro-like refocusing, Photo Sphere creation, and more are available in the new Google Camera app:

Lens Blur lets you change the point or level of focus after the photo is taken. You can choose to make any object come into focus simply by tapping on it in the image. By changing the depth-of-field slider, you can simulate different aperture sizes, to achieve bokeh effects ranging from subtle to surreal (e.g., tilt-shift). The new image is rendered instantly, allowing you to see your changes in real time.

You can go nerd out on the Google Research blog. [Via]
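Assuming a per-pixel depth map is already available, the "simulate different aperture sizes" part can be sketched with the thin-lens circle-of-confusion formula: blur grows with aperture diameter and with how far a point sits from the plane of focus, and tapping to focus amounts to setting `focus_depth` to the depth under your finger. Units and values below are illustrative, and a real renderer would also handle occlusion edges and bokeh shape; the function names are hypothetical.

```python
from typing import List


def circle_of_confusion(depth: float, focus_depth: float,
                        focal_length: float, f_number: float) -> float:
    """Thin-lens blur-circle diameter on the sensor (same units as focal_length).

    c = A * f * |z - z_f| / (z * (z_f - f)), with aperture diameter A = f / N.
    """
    aperture = focal_length / f_number
    return (aperture * focal_length * abs(depth - focus_depth)
            / (depth * (focus_depth - focal_length)))


def blur_radii(depth_map: List[List[float]], focus_depth: float,
               focal_length: float, f_number: float,
               pixel_pitch: float) -> List[List[float]]:
    """Per-pixel blur radius in pixels, for a renderer to spread each pixel over."""
    return [[circle_of_confusion(z, focus_depth, focal_length, f_number) / (2 * pixel_pitch)
             for z in row] for row in depth_map]


if __name__ == "__main__":
    depths = [[1.0, 2.0, 4.0], [2.0, 8.0, 50.0]]  # meters (made-up scene)
    tapped = depths[0][1]                         # "tap" the subject at 2 m
    for n in (1.8, 8.0):                          # wide vs. narrow simulated aperture
        print(f"f/{n}:", blur_radii(depths, tapped, 0.05, n, 5e-6))
```

Halving the f-number doubles every blur radius, while anything at the tapped depth stays perfectly sharp; that's the whole depth-of-field slider in one formula.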